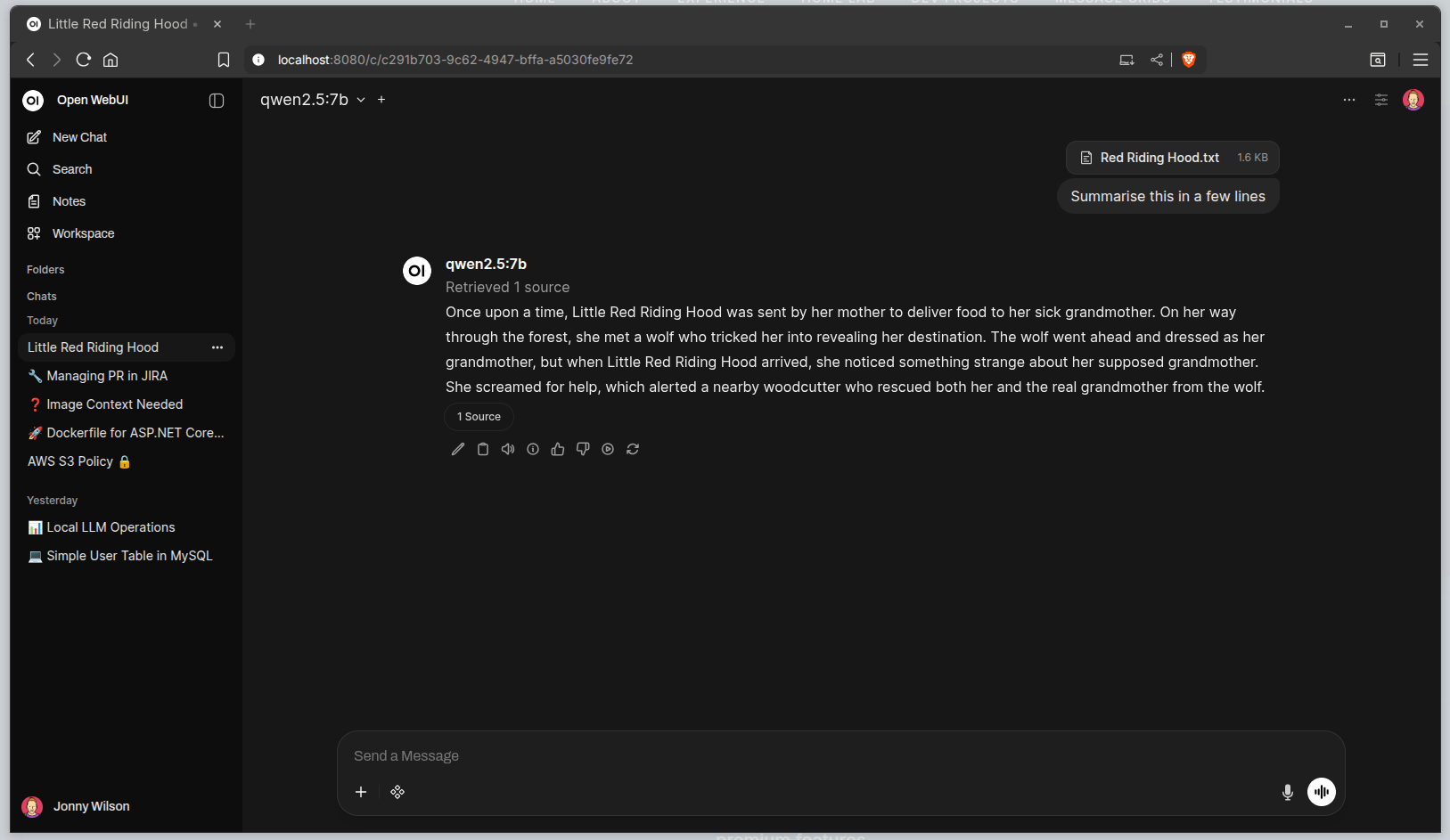

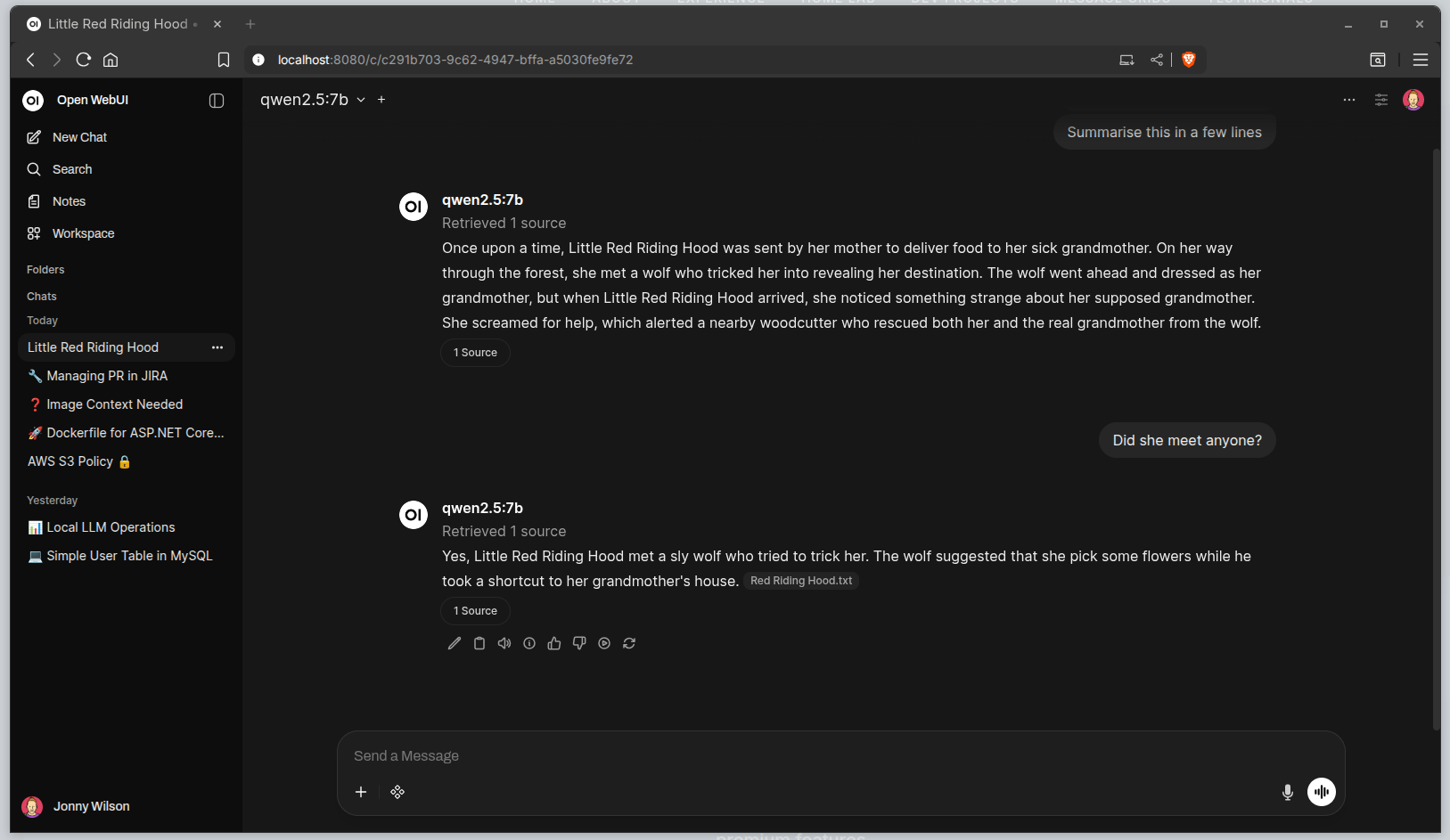

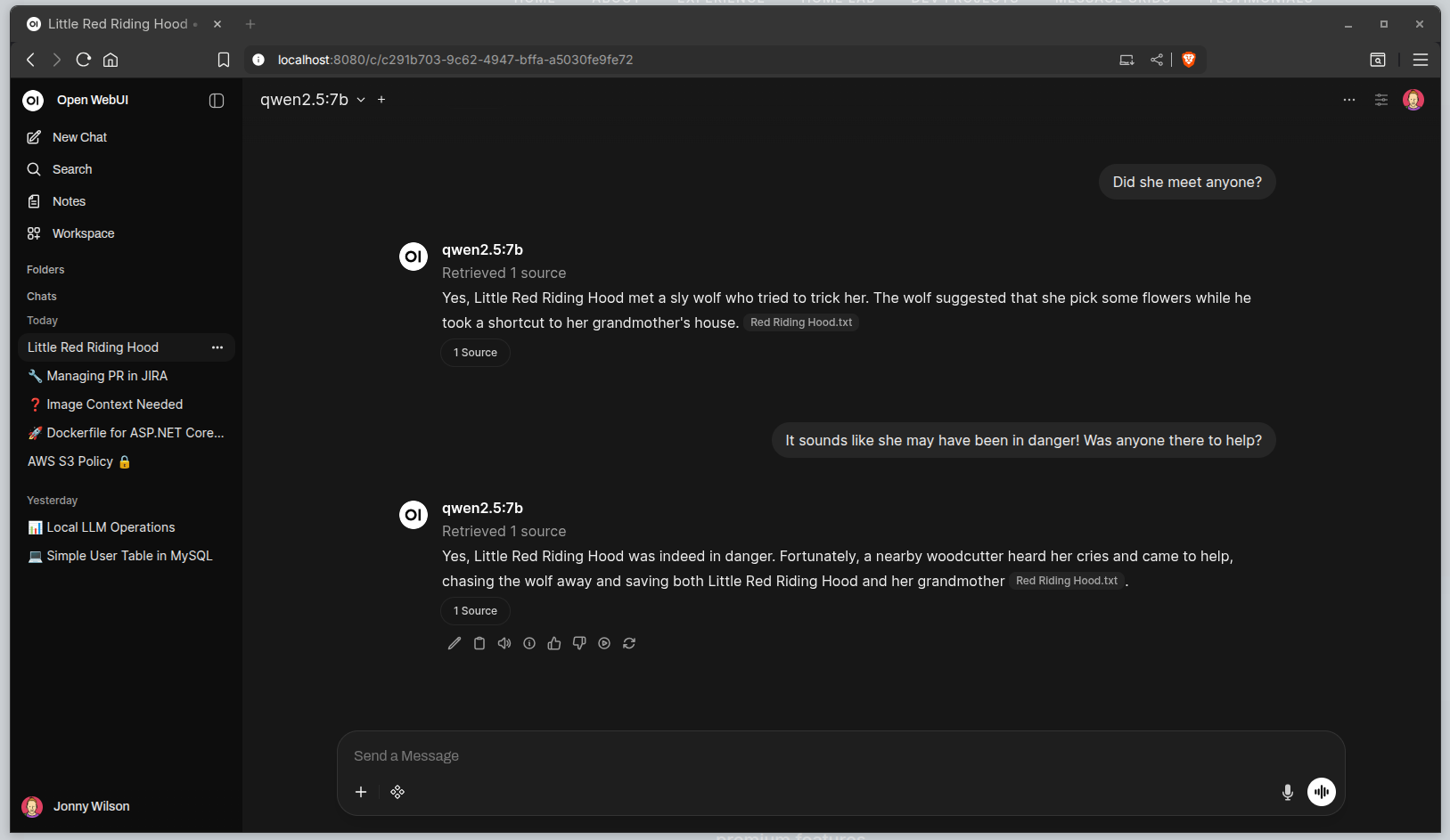

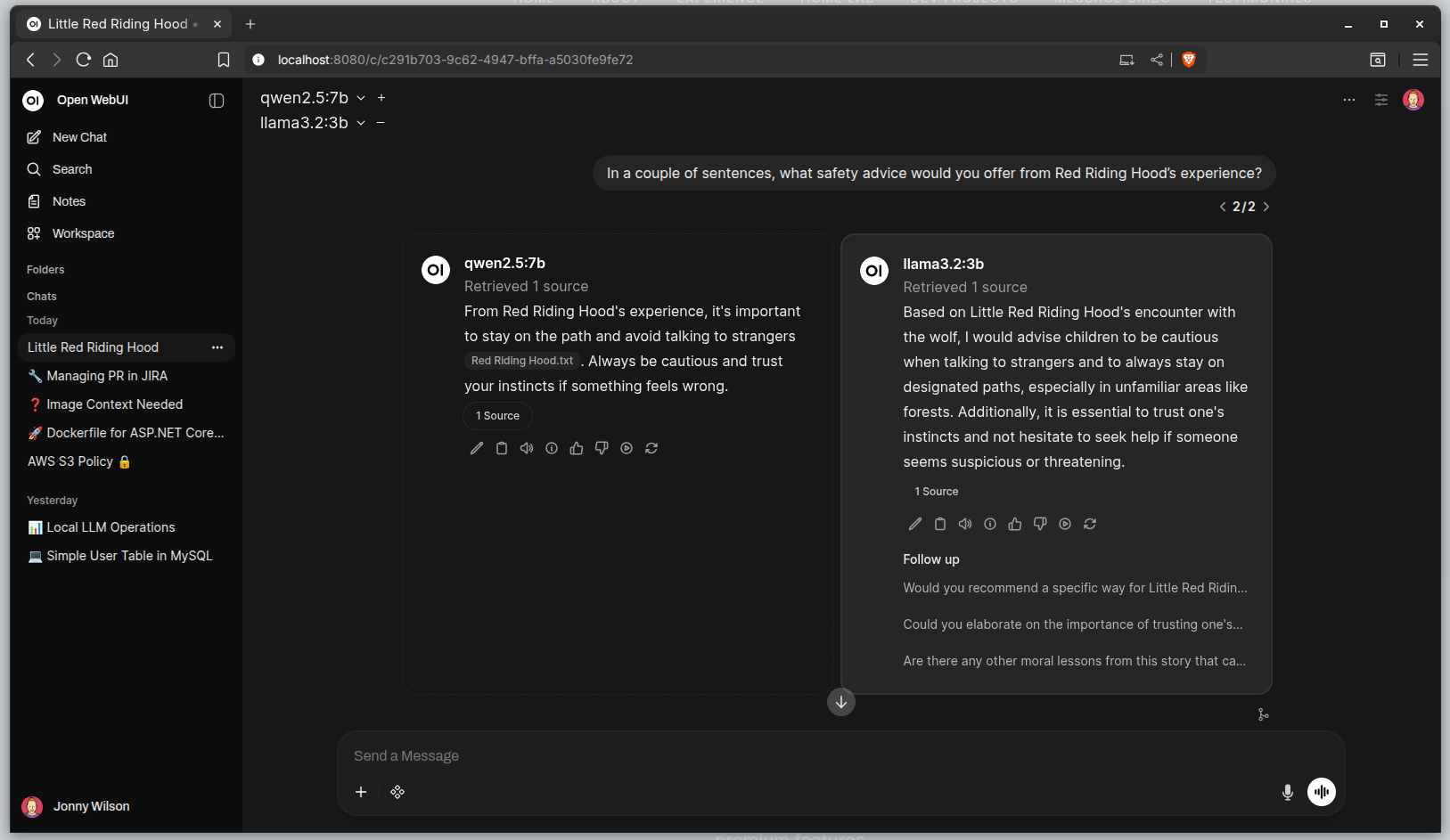

Running Large Language Models (LLMs) locally with Open WebUI lets me switch models as needed to get the best performance for each task, without being locked into a single model for everything or forced to pay for premium features.

This approach also prioritises my privacy. It keeps my prompts and other data out of large AI datacenters and away from ruthless and untrustworthy data brokers, so my personal information stays secure and under my control.

Open WebUI's slick interface is modeled on the popular ChatGPT UI, so I can interact with LLMs in a way that feels familiar and natural, regardless of the model selected.

And for fun, I've taken a Qwen model and, with some manipulation, developed a chess-playing AI called KnightShift. I play on a physical board and KnightShift plays virtually. The question is, have I beaten it?

Benefits Of Local AI

As you explore the world of Large Language Models (LLMs), you've likely come across popular cloud-based services like OpenAI and Gemini. While these platforms offer impressive capabilities, they also come with limitations – and a significant price tag.

With local LLMs, you can bypass the cloud entirely and unlock a more personalised, efficient, and cost-effective approach to AI development. By running models locally on your machine, you gain complete control over your data and workflows, ensuring that your projects are secure, scalable, and tailored to your specific needs.

No longer will you be tied to expensive subscription fees or limited by cloud infrastructure. With local LLMs, you can train, test, and deploy models at a fraction of the cost, and your requests skip the network round trip to a remote service, although overall speed depends on your hardware. It's an empowering experience that will revolutionise your approach to AI-driven projects.

Whether you're a seasoned developer or just starting out with LLMs, local deployment offers a unique opportunity to take control of your data and workflows. Say goodbye to cloud-based limitations and hello to a more personalised, flexible, and cost-effective way of working with AI.

- Written by Llama3:8b in Open WebUI.